Facebook and Its Religion of Growth

by Taylor Lange

There was a time when I dreamt of working at Facebook. I was less intrigued by the software development side than by the prospect of studying the exchange of information and the cultural evolution occurring through online social networks. One of my research interests is how individuals learn to act cooperatively and acquire new preferences. What better place is there to do that than at the largest online social media platform?

Since joining Facebook in 2009, I’ve experienced Facebook’s byzantine construction firsthand, from the infinite news feed and pirate speak to what feels like the 50,000 times they’ve changed the layout of the home screen. The 2.8 billion other users and I have tolerated these seemingly superfluous changes just to keep up with people we only interact with through “likes” and the occasional comment. This habitual use by long-term users has fueled the growth of Facebook’s platform from a website intended to help Ivy League computer geeks meet women to a multi-purpose social media monopoly with a trillion-dollar valuation.

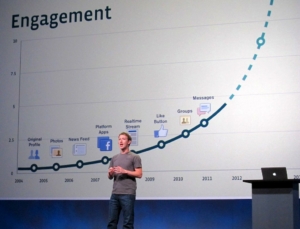

Such growth reflects the ethos driving Facebook. As one employee describes their orientation experience: “We believe in the religion of growth.” Facebook’s framework provides a fascinating and terrifying example of what happens when unhindered growth is the goal.

Hard-Wired for Social Networking

A “social network” is how we describe a collection of individuals and their connections. As one of the most socially evolved animals on the planet, humans form networks without even thinking about it. Consider some of the networks you’re involved in—friends, acquaintances, co-workers, and family members—who you’re connected to through mutual interests, frequented locations, employers, and most basically your DNA. These networks are not only healthy, but essential for our survival and wellbeing.

Figure 1. Social networks: good at growing.

One would think, then, that a platform designed to foster human connection on a global scale would largely improve social wellbeing. Of course, for many this is arguably the case. Facebook allows users to connect to anyone with internet access through features like Messenger and groups. It has even helped reconnect long-lost family members and friends. Even CASSE has its own Facebook page to stay in touch with steady staters across the globe! The problem with Facebook, then, lies not in social networking itself, but in how the company manipulates networks for the sake of expansion.

The Growth Goal and Execution

Much can be learned about a community and its individuals by studying their connections and how those connections change over time. Facebook has served as a tool for exactly this kind of research, for instance in studies of how individuals’ preferences in movies, books, and other media evolve over time. By studying the interests of individuals and those of their peers in a small university group on Facebook, researchers were able to accurately predict the new connections people would form and the interests they would likely explore. The key insight is that the more information gathered about a person’s connections, the more accurately one can predict what they might like next. Therefore, adding more individuals to the network and introducing more ways for them to connect augments the information about those already there.

Facebook has mastered the process of capitalizing on this phenomenon. When its user count plateaued around 90 million in 2007, Facebook created a special growth team for the sole purpose of bringing as many people onto the site as possible. The growth team developed the “People You May Know” feature, whereby Facebook makes connection suggestions based on the data compiled on a user, nudging users to increase their connections. Users quickly discovered the eerie nature of the feature, as questionable suggestions appeared and caused many to wonder: What information is Facebook using to make these recommendations and how are they getting that information?

No one knows for sure which sources Facebook draws on to make these suggestions. We do know, however, that for every new person added to the network and every new connection made, Facebook’s software gleans more information about existing members. Its algorithms then use that information to target you with ads, content, and connection suggestions that you are most likely to engage with, keeping you on the site. The more time you spend and the more you engage, the more connections you tend to make. The whole process is a feedback loop designed to continually “maximize the system.”

Facebook’s growth plan worked better than anybody ever imagined, and the algorithm used to learn about people and manipulate content is a genius invention of its top-tier development team. However, like many technologies, the purpose it serves changes with the intent of the user. The algorithm’s main function is to feed content to users based on their “friends” and previous interests, but in the wrong hands, this tool proves deadly.

Mark Zuckerberg Meets Rich Uncle Pennybags

In the company’s quest to grow, it has increasingly diversified the means of connection on its platform, exemplifying what many consider monopolistic behavior. A monopoly exists when a single seller faces so little competition that it can set market prices. Monopolies are disastrous for consumers because the lack of competition allows monopolists to restrict market output and artificially raise prices. This reduces consumer surplus and overall economic welfare, which is why monopolization has been illegal in the USA since the passage of the Sherman Antitrust Act in 1890.

Rich Uncle Pennybags, known to many as “the Monopoly man.” (CC BY-NC-ND 2.0, Sean Davis)

If a service like Facebook is free, can it really have a monopoly? The answer is yes, and the consequences go far beyond price gouging.

Facebook’s monopolistic behavior began with its billion-dollar acquisition of Instagram in 2012, when executives identified the rival platform as a threat. Instagram, at the time, only allowed image sharing with followers, who could then like and comment. Facebook also had these capabilities, making the two platforms natural competitors. By integrating Instagram with Facebook’s network, Facebook acquired the portion of Instagram’s userbase that wasn’t on Facebook and captured the competition’s advertising revenue. Instagram now brings in $20 billion annually in advertising for Facebook, more than a quarter of Facebook’s revenue.

Facebook’s next competitor purchase was the international messaging platform WhatsApp in 2014 for $19 billion. WhatsApp provided a secure, highly encrypted means of communication from anywhere in the world with internet access, an indispensable service in today’s global ecosystem. Facebook had its own messaging service that, by 2013, was outcompeted by WhatsApp in Europe and elsewhere, making the acquisition a no-brainer. The merger offered Facebook hundreds of millions of new users along with a well-developed, multi-functional messaging infrastructure that could even be used to transfer money. WhatsApp solidified Facebook’s growth into a multiplatform conglomerate with an astounding amount of power (and responsibility).

At this point it’s fair to say that Facebook is a monopoly. It has no direct competitors, in the sense that no other social media company provides as many services as it does. As a result, individuals who rely on Facebook services, such as messaging and news, are vulnerable if Facebook should fail. A prime example of this occurred on October 4, 2021, when Facebook experienced a service outage that lasted almost six hours. With Facebook servers down for much of the day, all Facebook, Instagram, and WhatsApp users were without access to messaging, content, or news, with no viable alternative. Such outages are devastating for the billions of individuals who rely on these services worldwide for a myriad of communication needs.

However, the consequences of Facebook’s monopolistic growth go beyond service outages. The platform has grown so large that it is unable to properly moderate and filter misinformation, with deadly consequences across the globe.

A Lesson from Uncle Ben

If Facebook’s monopolizing and ethically questionable information gathering don’t call to mind the mantra, “with great power comes great responsibility,” then you either haven’t seen Spider-Man or you might be an authoritarian. This nugget of wisdom from Peter Parker’s Uncle Ben applies to all entities that wield immeasurable power, from the U.S. government to the billionaires it has helped create, Zuckerberg included. With Facebook’s tremendous growth came tremendous power, and consequently great responsibility.

But Facebook has historically adopted a laissez-faire approach to this responsibility, particularly when it comes to the spread of disinformation on its platform. Take, for example, how Facebook facilitated Russian interference in the 2016 presidential election and attacks on parliamentary elections throughout Europe. Though the company denies any role in the January 6th insurrection at the U.S. Capitol, its own internal reports reveal that a small, coordinated group of super-sharers helped mobilize many of the people who stormed the Capitol, some of them intending to capture and kill elected representatives.

Former Facebook employees continue to expose the company’s poor handling of dangerous situations, particularly in developing countries. After removing thousands of inauthentic, propagandist accounts pushing the authoritarian Juan Orlando Hernández of Honduras and devoting her spare time to combating misinformation accounts in various countries (including the UK and Australia), former data scientist Sophie Zhang was fired from Facebook for devoting too much time to policing civic engagement. The most recent whistleblower, Frances Haugen, claimed that Facebook phased out efforts to combat disinformation, with potentially deadly consequences.

In Myanmar, Buddhist citizens and the military engaged in a genocidal campaign against the Muslim Rohingya minority from 2016 to 2017 that killed thousands and drove more than 700,000 Rohingya to flee the country. Throughout the campaign, fake news, hate speech, and other misinformation spread across Facebook, a particularly potent channel in a country where 38 percent of citizens get their news through the site. Not only did it take two years for many of the accounts to be removed, but Facebook also refused to share both user data and the measures it took to address the problem with international investigators. As if one genocide weren’t enough, Facebook seems to be repeating history in Ethiopia, where murderous riots inspired by ethnic hate speech on Facebook have already claimed the lives of over 80 minority citizens.

Facebook is a case study in how the obsession with growth—whether of a nation or a social media monopoly—has dire implications for national security and international stability.

Channeling Taft and Roosevelt: Break Them Up

Mark Zuckerberg preaching the religion of growth. (CC BY-NC 2.0, Niall Kennedy)

Katherine Losse, Mark Zuckerberg’s one-time speechwriter, best summarizes Facebook’s growth mindset in her memoir when she says, “Scaling and growth are everything; individuals and their experiences are secondary to what is necessary to maximize the system.” The company’s religion of growth has been so successful that it has engulfed competitors and grown beyond its means to monitor and control the content spreading across its network (even if it wanted to). Though Facebook has successfully connected people around the globe and circulated some important information throughout the pandemic, the toll it has taken on democracy has exceeded its advantages. Its sheer size threatens not only the USA but the world as well.

Antitrust legislation was the solution to adverse conditions arising from trusts like Standard Oil and U.S. Steel. These companies drove competitors out of business by temporarily cutting prices below cost, which ultimately concentrated wealth into the hands of a few who wielded this wealth to influence American politics in their favor. Thankfully, Presidents Theodore Roosevelt and William Taft brought the hammer of justice down on the trusts of John D. Rockefeller and J.P. Morgan: Roosevelt’s administration dissolved Morgan’s Northern Securities railroad trust, and Standard Oil was broken up under Taft in 1911. Now the Federal Trade Commission under Biden is pursuing an antitrust suit that seeks to do the same to Facebook by unwinding its purchases of Instagram and WhatsApp.

Without proper oversight or the ability for users to readily disengage, Facebook will continue to expand beyond its limits of control. If the past is any indication, there is bound to be more bloodshed. Let’s hope Uncle Sam is still big enough to keep the peace.

Taylor Lange is CASSE’s ecological economist and holds a Ph.D. in Ecology and Environmental Science.

"General Audience with Pope Francis" by Catholic Church (England and Wales) is licensed under CC BY-NC-SA 2.0

It’s survival of the fittest in the business world. As Microsoft has done before, Facebook is swotting (S.W.O.T.) aside any future threat by buying up all of the opposition. Growth and profit are promoted in all profit-driven environments; a culture of responsibility would weaken the entity’s fitness to survive. Is responsibility just another cost to minimise?

So what’s the alternative to the big monopoly? We enjoy the benefits of social connection and access to the big global noticeboard; however, there’s a monopoly and a lack of policing on the network. Breaking up the monopoly and having different companies bid for licences to operate parts of the network (like rail or mobile phone networks) will not incentivise more corporate responsibility. More likely, it would mean less responsibility, as multiple companies would shirk it by blaming one another.

The only solution is for consumers to revolt. Let me paint a scenario. Let’s say Greenpeace partners with the Gates Foundation to build an alternative, cooperatively modelled social network. Consumers enhance their social reputations by using the new network. They direct their purchasing through the network and build their personal profiles and reputations among one another by demonstrating ethical consumption behaviour, earning additional reputational points for directing purchases to cooperatives. So the network morphs into a cooperative driving force. Consumerism is discouraged, and there is a talent exodus from corporates to a growing market dominated by cooperatives. The cooperatives compete to deliver sustainability and fairness and to support democracy. Humanity focuses less on consumerism and more on sustainable, fair living.

Hi Connor,

Thanks for your reply! You bring up some interesting points.

I’m not sure I agree with you on responsibility being just another cost to be minimized. While it can be a cost, companies that embrace social responsibility, such as B Corps, are becoming more successful because many consumers are willing to pay a premium for products sourced from a socially and environmentally responsible company. In many cases, the costs of responsibility can be outweighed by its benefits, especially if those benefits are effectively communicated to consumers.

I also think that breaking up the monopoly means the company is no longer a one-stop shop, which allows other social networks, such as the one you mentioned, to compete more effectively in the marketplace.

As for breaking up Facebook to remove the monopoly, we face the challenge of leaving the big, seamless social connection intact, a challenge which could probably be met. I agree that it’s all about tweaking the market so that operators are responsible. B Corps are a step along the way to that, communicating their positive responsibility to attract demand in their direction.

For me, the ideal scenario is one in which consumers voluntarily build visible online reputations when they consume responsibly, mirrored by cooperative-type suppliers building a reputational score when they deliver responsibly: an environmental/social Trustpilot score. When these market forces are in equilibrium, we get a steady state.

Many thanks for this, Taylor. Even though I work in technology and despise Facebook, I’d never made the connection between its metastasizing behavior and an underlying addiction to growth at any cost. It’s pretty clear now!

I’m glad you enjoyed the post, Cole! I’ve been watching Facebook for a while, and the behavior of its executives is really troubling. The growth is poised to bury us all.